Chapter 21 Using a testing set to evaluate the quality of a model

In this lab activity, we will do the following: - load a dataset - randomly divide the dataset into training and testing data - train a linear model and a regression tree using the training set - compute training error - compute testing error

21.1 Dependencies and setup

We’ll use the following packages in this lab activity:

library(tidyverse)

library(modelr)

library(rpart) # Used to build a regression tree

library(rpart.plot) # Used to visualize treesYou’ll need to install any packages that you don’t already have installed.

21.2 Loading and preprocessing the data

In this lab activity, we’ll be using the Seoul bike dataset from the UCI machine learning repository. This dataset contains the number of public bikes rented at each hour in a Seoul bike sharing system. In addition to the number of bikes, the data contains other variables, including weather and holiday information. Our goal will be to build some simple models that predict the number of bikes rented as a function of other attributes available in the dataset.

# Load data from file (you will need to adjust the file path to run locally)

data <- read_csv("lecture-material/week-08/data/SeoulBikeData.csv")## Rows: 8760 Columns: 14

## ── Column specification ─────────────────────────────────────────────────────────────────────────────────

## Delimiter: ","

## chr (4): date, seasons, holiday, functioning_day

## dbl (10): rented_bike_count, hour, temperature, humidity, wind_speed, visibi...

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.We can look at the attributes of our data:

colnames(data)## [1] "date" "rented_bike_count" "hour"

## [4] "temperature" "humidity" "wind_speed"

## [7] "visibility" "dew_point_temperature" "solar_radiation"

## [10] "rainfall" "snowfall" "seasons"

## [13] "holiday" "functioning_day"Next, we want to convert categorical attributes into proper factors.

# Convert categorical variables into factors:

data$holiday <- as.factor(data$holiday)

data$functioning_day <- as.factor(data$functioning_day)

data$seasons <- as.factor(data$seasons)21.3 Creating training and testing sets

Next, we want to split our full data set into a training set and a testing set. We’ll use the training set to train/build our models, and then we can use the testing set to evaluate the performance of our model on data unseen during training.

# First assign ID to each row to help us make the split.

data <- data %>%

mutate(id = row_number())

# Size (as a proportion) of our training set.

training_set_size <- 0.5

# Use slice sample create a training set comprising a sample of the rows from

# the full dataset.

training_data <- data %>%

slice_sample(prop = training_set_size)

# The testing set should be all of the rows in data not in the training set

# For this, we can use the 'anti_join' dplyr function

testing_data <- data %>%

anti_join(training_data, by = "id")

# Alternatively, we could have used the filter function to do the same thing

# testing_data <- data %>%

# filter(!(id %in% training_data$id))21.4 Training a simple linear model

Next, we’ll use linear regression to estimate a simple model of rented_bike_count as a function of the temperature, rainfall, and hour attributes.

That is, rented_bike_count will be our response variable, and temperature, rainfall, and hour are our predictor attributes.

Note that I picked these variables somewhat arbitrarily, so I do not necessarily have a strong intuition for whether these are good predictor attributes for this particular problem.

Recall from previous lab activities that we can use the lm function to train a linear model in R.

# Train a linear model of rented_bike_count as a function of the temperature,

# rainfall, and hour attributes.

model_a <- lm(

formula = rented_bike_count ~ temperature + rainfall + hour,

data = training_data

)

summary(model_a)##

## Call:

## lm(formula = rented_bike_count ~ temperature + rainfall + hour,

## data = training_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1326.88 -295.37 -40.41 239.22 2127.28

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -4.6820 15.8924 -0.295 0.768

## temperature 27.5735 0.6276 43.936 <2e-16 ***

## rainfall -79.5299 6.3631 -12.499 <2e-16 ***

## hour 32.4784 1.0785 30.114 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 491 on 4376 degrees of freedom

## Multiple R-squared: 0.4272, Adjusted R-squared: 0.4268

## F-statistic: 1088 on 3 and 4376 DF, p-value: < 2.2e-1621.4.1 Computing training and testing error

The summary output for our model already gives a lot of useful information about model’s training error (see the Residual standard error).

We can also calculate the training error manually:

# Use the predict function to get the model's output for each row of the

# training data

mA_training_predictions <- predict(

model_a,

data = training_data

)

# Using the training prediction values, we can compute the mean squared error

# for the model on the training data.

mA_training_MSE <- mean(

(training_data$rented_bike_count - mA_training_predictions)^2

)

mA_training_MSE## [1] 240900.1On it’s own, the mean squared error (MSE) for a model is a bit hard to interpret. We can also compute the root mean squared error (RMSE):

sqrt(mA_training_MSE)## [1] 490.8158which gives us a better idea of the average error in the same units as the response variable (i.e., rented_bike_count).

Next, let’s compute the testing error of our model.

# Use the predict function to get the model's output for each testing example

mA_testing_predictions <- predict(

model_a,

data = testing_data

)

# Compute the mean squared error

mA_testing_MSE <- mean(

(testing_data$rented_bike_count - mA_testing_predictions)^2

)

mA_testing_MSE## [1] 608087.3Once again, we can also compute the root mean squared error (RMSE):

sqrt(mA_testing_MSE)## [1] 779.7995Notice how our testing error is much worse than our training error. This should make some intuitive sense: the testing data is unseen data not used during training. We do generally expect the testing error to be worse than the training error.

21.5 Comparing two models

Next, let’s train a different type of model to predict rented_bike_count as a function of temperature, rainfall, and hour.

For this, we’ll train a regression tree (using the rpart) package.

# Use rpart to train a regression tree using the training data

model_b <- rpart(

formula = rented_bike_count ~ temperature + rainfall + hour,

data = training_data,

parms = list(split="information")

)

summary(model_b)## Call:

## rpart(formula = rented_bike_count ~ temperature + rainfall +

## hour, data = training_data, parms = list(split = "information"))

## n= 4380

##

## CP nsplit rel error xerror xstd

## 1 0.23753011 0 1.0000000 1.0001279 0.02564038

## 2 0.15297243 1 0.7624699 0.7751432 0.02059988

## 3 0.04880516 2 0.6094975 0.6263734 0.01846915

## 4 0.04625842 3 0.5606923 0.5785074 0.01761798

## 5 0.03877934 4 0.5144339 0.5368105 0.01665883

## 6 0.03746746 5 0.4756545 0.5195437 0.01651148

## 7 0.01486928 6 0.4381871 0.4664072 0.01542752

## 8 0.01178259 7 0.4233178 0.4258294 0.01488020

## 9 0.01172914 8 0.4115352 0.4208709 0.01494983

## 10 0.01083247 9 0.3998061 0.4206519 0.01496463

## 11 0.01000000 10 0.3889736 0.4090083 0.01469308

##

## Variable importance

## temperature hour rainfall

## 46 41 13

##

## Node number 1: 4380 observations, complexity param=0.2375301

## mean=711.5167, MSE=420581.2

## left son=2 (2536 obs) right son=3 (1844 obs)

## Primary splits:

## temperature < 16.75 to the left, improve=0.23753010, (0 missing)

## hour < 6.5 to the left, improve=0.16976420, (0 missing)

## rainfall < 0.15 to the right, improve=0.04259314, (0 missing)

## Surrogate splits:

## rainfall < 0.05 to the left, agree=0.584, adj=0.012, (0 split)

##

## Node number 2: 2536 observations, complexity param=0.04625842

## mean=441.9972, MSE=188791.1

## left son=4 (1345 obs) right son=5 (1191 obs)

## Primary splits:

## temperature < 5.75 to the left, improve=0.17798520, (0 missing)

## hour < 6.5 to the left, improve=0.14881250, (0 missing)

## rainfall < 0.05 to the right, improve=0.02926475, (0 missing)

## Surrogate splits:

## rainfall < 0.15 to the left, agree=0.555, adj=0.053, (0 split)

## hour < 0.5 to the right, agree=0.532, adj=0.003, (0 split)

##

## Node number 3: 1844 observations, complexity param=0.1529724

## mean=1082.179, MSE=502064

## left son=6 (1172 obs) right son=7 (672 obs)

## Primary splits:

## hour < 15.5 to the left, improve=0.30438070, (0 missing)

## rainfall < 0.05 to the right, improve=0.11650560, (0 missing)

## temperature < 22.65 to the left, improve=0.02495672, (0 missing)

## Surrogate splits:

## temperature < 34.55 to the left, agree=0.638, adj=0.006, (0 split)

## rainfall < 21.5 to the left, agree=0.636, adj=0.001, (0 split)

##

## Node number 4: 1345 observations

## mean=269.5019, MSE=58181.41

##

## Node number 5: 1191 observations, complexity param=0.03746746

## mean=636.7968, MSE=264740.2

## left son=10 (379 obs) right son=11 (812 obs)

## Primary splits:

## hour < 6.5 to the left, improve=0.21890040, (0 missing)

## rainfall < 0.05 to the right, improve=0.08447385, (0 missing)

## temperature < 11.15 to the left, improve=0.02051486, (0 missing)

## Surrogate splits:

## rainfall < 6.5 to the right, agree=0.683, adj=0.005, (0 split)

##

## Node number 6: 1172 observations, complexity param=0.03877934

## mean=786.1672, MSE=243224.2

## left son=12 (440 obs) right son=13 (732 obs)

## Primary splits:

## hour < 6.5 to the left, improve=0.25060510, (0 missing)

## rainfall < 0.05 to the right, improve=0.11510610, (0 missing)

## temperature < 22.65 to the left, improve=0.02286599, (0 missing)

## Surrogate splits:

## rainfall < 0.05 to the right, agree=0.635, adj=0.027, (0 split)

## temperature < 16.95 to the left, agree=0.626, adj=0.005, (0 split)

##

## Node number 7: 672 observations, complexity param=0.04880516

## mean=1598.438, MSE=534151.6

## left son=14 (42 obs) right son=15 (630 obs)

## Primary splits:

## rainfall < 0.25 to the right, improve=0.25047010, (0 missing)

## hour < 22.5 to the right, improve=0.05896777, (0 missing)

## temperature < 24.25 to the left, improve=0.03694892, (0 missing)

##

## Node number 10: 379 observations

## mean=284.4327, MSE=50237.71

##

## Node number 11: 812 observations, complexity param=0.01486928

## mean=801.2623, MSE=279858.4

## left son=22 (55 obs) right son=23 (757 obs)

## Primary splits:

## rainfall < 0.15 to the right, improve=0.12053680, (0 missing)

## hour < 16.5 to the left, improve=0.04475629, (0 missing)

## temperature < 9.75 to the left, improve=0.02317872, (0 missing)

##

## Node number 12: 440 observations

## mean=467.7273, MSE=91872.17

##

## Node number 13: 732 observations, complexity param=0.01172914

## mean=977.5792, MSE=236609

## left son=26 (35 obs) right son=27 (697 obs)

## Primary splits:

## rainfall < 0.3 to the right, improve=0.12475200, (0 missing)

## temperature < 30.4 to the right, improve=0.04264302, (0 missing)

## hour < 11.5 to the left, improve=0.02038190, (0 missing)

##

## Node number 14: 42 observations

## mean=181.8095, MSE=94434.15

##

## Node number 15: 630 observations, complexity param=0.01178259

## mean=1692.879, MSE=420757.9

## left son=30 (64 obs) right son=31 (566 obs)

## Primary splits:

## hour < 22.5 to the right, improve=0.08188262, (0 missing)

## temperature < 33.25 to the right, improve=0.03200264, (0 missing)

##

## Node number 22: 55 observations

## mean=119.8727, MSE=25840.07

##

## Node number 23: 757 observations

## mean=850.7688, MSE=262130

##

## Node number 26: 35 observations

## mean=210.8857, MSE=61640.96

##

## Node number 27: 697 observations

## mean=1016.079, MSE=214395.4

##

## Node number 30: 64 observations

## mean=1140.891, MSE=112396.6

##

## Node number 31: 566 observations, complexity param=0.01083247

## mean=1755.295, MSE=417277.1

## left son=62 (195 obs) right son=63 (371 obs)

## Primary splits:

## hour < 17.5 to the left, improve=0.08449101, (0 missing)

## temperature < 33.25 to the right, improve=0.04753136, (0 missing)

## Surrogate splits:

## temperature < 33.45 to the right, agree=0.686, adj=0.087, (0 split)

##

## Node number 62: 195 observations

## mean=1496.303, MSE=290202.5

##

## Node number 63: 371 observations

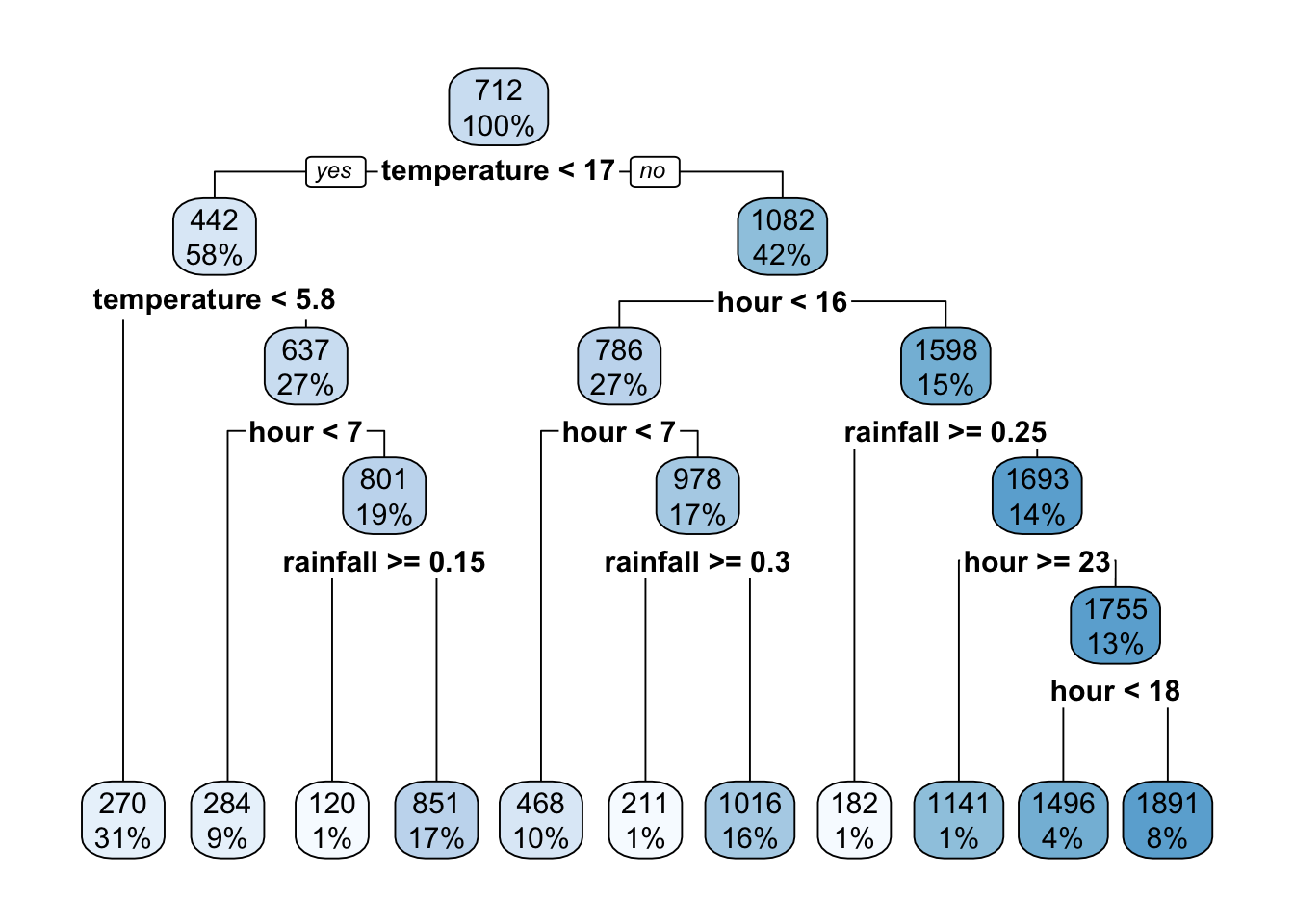

## mean=1891.423, MSE=430281.3We can look at the regression tree visually:

rpart.plot(model_b)

And then we can look more closely at the training error for our regression tree:

# Use the predict function to compute the output of our model for each training

# example

mB_training_predictions <- predict(

model_b,

data = training_data

)

# Compute the mean squared error

mB_training_MSE <- mean(

(training_data$rented_bike_count - mB_training_predictions)^2

)

mB_training_MSE## [1] 163595RMSE (for the training data):

sqrt(mB_training_MSE)## [1] 404.4688If you compare the training error for the regression tree to the training error for our linear model, you’ll see that the regression tree has lower training error. But, training error really isn’t a great way to compare the quality of two models. Remember, we often prefer models that we expect to generalize well to unseen data. Comparing each of the two model’s error on the testing set can give us a better idea of which model might generalize better.

To do so, we’ll need to compute the regression tree’s error on the testing set:

mB_testing_predictions <- predict(

model_b,

data = testing_data

)

# Mean squared error

mB_testing_MSE <- mean(

(testing_data$rented_bike_count - mB_testing_predictions)^2

)

mB_testing_MSE## [1] 689364.4RMSE (for the testing data):

sqrt(mB_testing_MSE)## [1] 830.2797The regression tree’s testing error is much worse than the simple linear model’s testing error, suggesting that the simple linear model might generalize better to unseen data as compared to our regression tree.

21.6 Exercises

- Identify any lines of code that you do not understand. Use the documentation to figure out what is going on.

- We did not use all of the attributes in the bike rental data set to build our models. Are there attributes that we didn’t use that you think would be useful to include in a predictive model?

- Try building a couple of different models using the predictor attributes that you think would be most useful. How do their training/testing errors compare?

- The

modelrpackage has all sorts of useful functions for assessing model quality. Read over themodelrdocumentation: https://modelr.tidyverse.org/. Try using some of the functions to compute different types of training/testing errors for the models we trained in this lab activity. - Think about the structure of the dataset. What kinds of issues might run into when we randomly divide the data into a training and testing set? For example, are we guaranteed to have a good representation of every hour of the day in both our training and testing data if we split the data randomly? What other sampling procedures could we use to perform a training/testing split that might avoid some of these issues?

21.7 References

Dua, D. and Graff, C. (2019). UCI Machine Learning Repository http://archive.ics.uci.edu/ml. Irvine, CA: University of California, School of Information and Computer Science.